Copyright 2021-2023 Lawrence Livermore National Security, LLC and other MuyGPyS Project Developers. See the top-level COPYRIGHT file for details.

SPDX-License-Identifier: MIT

Anisotropic Metric Tutorial

This notebook walks through a simple anisotropic regression workflow and illustrates anisotropic features of MuyGPyS.

[2]:

import numpy as np

from MuyGPyS._test.sampler import UnivariateSampler2D, print_results

from MuyGPyS.gp import MuyGPS

from MuyGPyS.gp.deformation import Anisotropy, Isotropy, l2

from MuyGPyS.gp.hyperparameter import AnalyticScale, Parameter

from MuyGPyS.gp.kernels import Matern

from MuyGPyS.gp.noise import HomoscedasticNoise

from MuyGPyS.gp.tensors import make_predict_tensors, make_train_tensors

from MuyGPyS.neighbors import NN_Wrapper

from MuyGPyS.optimize import Bayes_optimize

from MuyGPyS.optimize.batch import sample_batch

from MuyGPyS.optimize.loss import lool_fn

Matplotlib is building the font cache; this may take a moment.

We will set a random seed here for consistency when building docs. In practice we would not fix a seed.

[3]:

np.random.seed(0)

Sampling a 2D Surface from a Conventional GP

This notebook will use a simple two-dimensional curve sampled from a conventional Gaussian process. We will specify the domain as a simple grid on a one-dimensional surface and divide the observations näively into train and test data.

Feel free to download the source notebook and experiment with different parameters.

First we specify the data size and the proportion of the train/test split.

[4]:

points_per_dim = 60

train_ratio = 0.05

We will assume that the true data is produced with no noise, so we specify a very small noise prior for numerical stability. This is an idealized experiment with effectively no instrument error.

[5]:

nugget_noise = HomoscedasticNoise(1e-14)

We will perturb our simulated observations (the training data) with some i.i.d Gaussian measurement noise.

[6]:

measurement_noise = HomoscedasticNoise(1e-7)

Finally, we will specify a Matérn kernel with hyperparameters. smoothness determines how differentiable the GP prior is. The larger smoothness grows, the smoother sampled functions will become.

[7]:

sim_smoothness = Parameter(1.5)

We will use an anisotropic deformation, where displacement along the dimensions are weighted differently. Each dimension has a corresponding length_scale parameter.

[8]:

sim_length_scale0 = Parameter(0.1)

sim_length_scale1 = Parameter(0.5)

We use all of these parameters to define a Matérn kernel GP and a sampler for convenience. The UnivariateSampler2D class is a convenience class for this tutorial, and is not a part of the library. We will use an anisotropic deformation to ensure that we sample data from the appropriate distribution.

[9]:

sampler = UnivariateSampler2D(

points_per_dim=points_per_dim,

train_ratio=train_ratio,

kernel=Matern(

smoothness=sim_smoothness,

deformation=Anisotropy(

l2,

length_scale0=sim_length_scale0,

length_scale1=sim_length_scale1,

),

),

noise=nugget_noise,

measurement_noise=measurement_noise,

)

Finally, we will sample a curve from this GP prior and visualize it. Note that we perturb the train responses (the values that our model will actual receive) with Gaussian measurement noise. Further note that this is not especially fast, as sampling from a conventional Gaussian process requires computing the Cholesky decomposition of a (data_count, data_count) matrix.

[10]:

train_features, test_features = sampler.features()

[11]:

train_responses, test_responses = sampler.sample()

[12]:

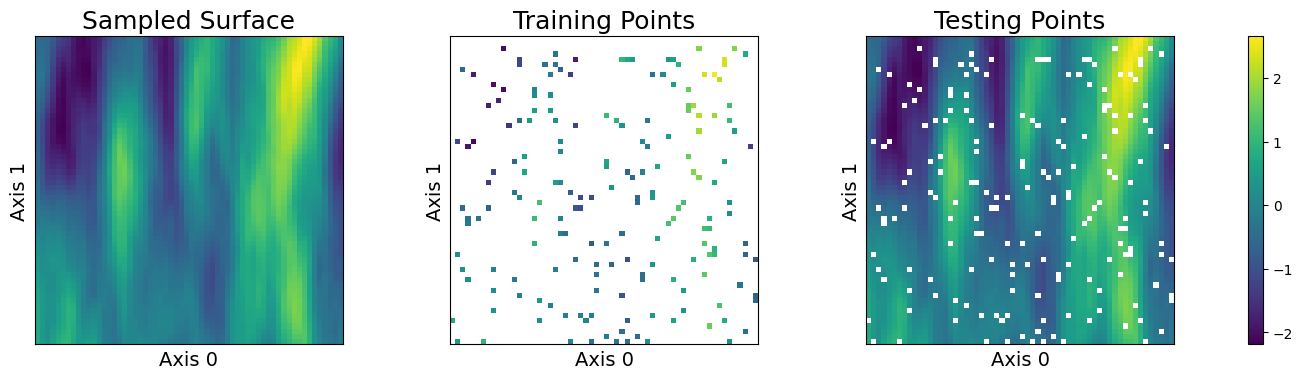

sampler.plot_sample()

We can observe that our choice of anisotropy has caused the globular Gaussian features in the sampled surface to “smear” in the direction of the more heavily weighted axis.

Training an Anisotropic Model

We will not belabor the details covered in the Univariate Regression Tutorial. We must similarly construct a nearest neighbors index and sample a training batch in order to optimize a model.

⚠️ For now, we use isotropic nearest neighbors as we do not have a guess as to the anisotropic scaling. Future versions of the library will use learned anisotropy to modify neighborhood structure during optimization. ⚠️

[13]:

nn_count = 30

nbrs_lookup = NN_Wrapper(train_features, nn_count, nn_method="exact", algorithm="ball_tree")

batch_count = sampler.train_count

batch_indices, batch_nn_indices = sample_batch(

nbrs_lookup, batch_count, sampler.train_count

)

We construct a MuyGPs object with a Matérn kernel. For simplicity, we will fix smoothness and attempt to optimize the two length_scale parameters.

[14]:

muygps_anisotropic = MuyGPS(

kernel=Matern(

smoothness=sim_smoothness,

deformation=Anisotropy(

l2,

length_scale0=Parameter("log_sample", (0.01, 1.0)),

length_scale1=Parameter("log_sample", (0.01, 1.0)),

),

),

noise=measurement_noise,

scale=AnalyticScale(),

)

We will also create and optimze an isotropic model for comparison.

[15]:

muygps_isotropic = MuyGPS(

kernel=Matern(

smoothness=sim_smoothness,

deformation=Isotropy(

l2,

length_scale=Parameter("log_sample", (0.01, 1.0)),

),

),

noise=measurement_noise,

)

We build our difference tensors as usual and use Bayesian optimization.

[16]:

(

batch_crosswise_diffs,

batch_pairwise_diffs,

batch_targets,

batch_nn_targets,

) = make_train_tensors(

batch_indices,

batch_nn_indices,

train_features,

train_responses,

)

Keyword arguments for the optimization:

[17]:

opt_kwargs = {

"loss_fn": lool_fn,

"verbose": True,

"random_state": 1,

"init_points": 5,

"n_iter": 30,

"allow_duplicate_points": True,

}

[18]:

muygps_anisotropic = Bayes_optimize(

muygps_anisotropic,

batch_targets,

batch_nn_targets,

batch_crosswise_diffs,

batch_pairwise_diffs,

**opt_kwargs,

)

parameters to be optimized: ['length_scale0', 'length_scale1']

bounds: [[0.01 1. ]

[0.01 1. ]]

initial x0: [0.07655293 0.30564372]

| iter | target | length... | length... |

-------------------------------------------------

| 1 | 574.8 | 0.07655 | 0.3056 |

| 2 | 440.2 | 0.4229 | 0.7231 |

| 3 | 301.6 | 0.01011 | 0.3093 |

| 4 | 251.9 | 0.1553 | 0.1014 |

| 5 | 458.9 | 0.1944 | 0.3521 |

| 6 | 389.5 | 0.4028 | 0.5434 |

| 7 | 562.3 | 0.1177 | 0.3752 |

| 8 | 273.3 | 0.8317 | 0.6635 |

| 9 | 580.4 | 0.1578 | 0.6325 |

| 10 | 559.3 | 0.09521 | 0.7611 |

| 11 | 125.1 | 0.4017 | 0.1698 |

| 12 | 585.8 | 0.1279 | 0.694 |

| 13 | 283.7 | 0.438 | 0.3585 |

| 14 | 499.2 | 0.04607 | 0.6306 |

| 15 | 373.6 | 0.5577 | 0.705 |

| 16 | 532.7 | 0.157 | 0.4126 |

| 17 | 586.2 | 0.1351 | 0.6988 |

| 18 | 571.2 | 0.228 | 0.8591 |

| 19 | 540.1 | 0.1101 | 0.9515 |

| 20 | 566.1 | 0.2759 | 0.9977 |

| 21 | 499.1 | 0.4303 | 0.9782 |

| 22 | -555.4 | 1.0 | 0.01 |

| 23 | 321.5 | 1.0 | 1.0 |

| 24 | 401.6 | 0.6919 | 1.0 |

| 25 | 569.5 | 0.2273 | 0.8345 |

| 26 | 580.0 | 0.1583 | 0.6301 |

| 27 | 142.7 | 1.0 | 0.4731 |

| 28 | 247.1 | 0.01 | 1.0 |

| 29 | 218.4 | 0.6718 | 0.4214 |

| 30 | 337.0 | 0.4668 | 0.4931 |

| 31 | 269.9 | 0.9954 | 0.7852 |

| 32 | 420.9 | 0.5595 | 0.8803 |

| 33 | 513.7 | 0.3559 | 0.8713 |

| 34 | 575.9 | 0.1804 | 0.9957 |

| 35 | 516.2 | 0.2717 | 0.6678 |

| 36 | 493.2 | 0.1228 | 0.2604 |

=================================================

[19]:

print(f"BayesianOptimization finds an optimimal pair of length scales")

print(f"\tlength_scale0 is {muygps_anisotropic.kernel.deformation.length_scale['length_scale0']()}")

print(f"\tlength_scale1 is {muygps_anisotropic.kernel.deformation.length_scale['length_scale1']()}")

BayesianOptimization finds an optimimal pair of length scales

length_scale0 is 0.13513485791455207

length_scale1 is 0.6987926126715593

Note here that these returned values might be a little different than what we used to sample the surface due to mutual unidentifiability between each other and the scale parameter. However, length_scale0 < length_scale1 as expected.

We also optimize the isotropic benchmark.

[20]:

muygps_isotropic = Bayes_optimize(

muygps_isotropic,

batch_targets,

batch_nn_targets,

batch_crosswise_diffs,

batch_pairwise_diffs,

**opt_kwargs,

)

parameters to be optimized: ['length_scale']

bounds: [[0.01 1. ]]

initial x0: [0.83917142]

| iter | target | length... |

-------------------------------------

| 1 | -3.343e+0 | 0.8392 |

| 2 | -3.484e+0 | 0.4229 |

| 3 | -2.103e+0 | 0.7231 |

| 4 | -151.5 | 0.01011 |

| 5 | -903.6 | 0.3093 |

| 6 | 324.5 | 0.1553 |

| 7 | 249.6 | 0.08109 |

| 8 | 72.52 | 0.2193 |

| 9 | 334.5 | 0.1205 |

| 10 | 338.7 | 0.1267 |

| 11 | 339.6 | 0.1385 |

| 12 | 339.9 | 0.1374 |

| 13 | 327.8 | 0.1144 |

| 14 | 340.2 | 0.1361 |

| 15 | 340.3 | 0.1353 |

| 16 | 340.4 | 0.1335 |

| 17 | 338.4 | 0.1415 |

| 18 | 339.8 | 0.1298 |

| 19 | 340.0 | 0.1303 |

| 20 | 338.3 | 0.1417 |

| 21 | 290.4 | 0.1716 |

| 22 | 339.9 | 0.13 |

| 23 | 340.1 | 0.1306 |

| 24 | 338.6 | 0.141 |

| 25 | 340.0 | 0.1303 |

| 26 | 340.2 | 0.1313 |

| 27 | 339.1 | 0.1401 |

| 28 | 340.0 | 0.1302 |

| 29 | 340.4 | 0.1348 |

| 30 | 339.7 | 0.1292 |

| 31 | 339.3 | 0.1395 |

| 32 | 340.4 | 0.1327 |

| 33 | 339.6 | 0.1289 |

| 34 | 340.0 | 0.137 |

| 35 | 340.3 | 0.1352 |

| 36 | 339.4 | 0.1284 |

=====================================

[21]:

print(f"BayesianOptimization finds that the optimimal isotropic length scale is {muygps_isotropic.kernel.deformation.length_scale()}")

BayesianOptimization finds that the optimimal isotropic length scale is 0.13350784744990493

We separately optimize the scale variance scale parameter for each model.

[22]:

muygps_anisotropic = muygps_anisotropic.optimize_scale(

batch_pairwise_diffs, batch_nn_targets

)

muygps_isotropic = muygps_isotropic.optimize_scale(

batch_pairwise_diffs, batch_nn_targets

)

Inference

As in the Univariate Regression Tutorial, we must realize difference tensors formed from the testing data and apply them to form Gaussian process predictions for our problem.

[23]:

test_count, _ = test_features.shape

indices = np.arange(test_count)

test_nn_indices, _ = nbrs_lookup.get_nns(test_features)

(

test_crosswise_diffs,

test_pairwise_diffs,

test_nn_targets,

) = make_predict_tensors(

indices,

test_nn_indices,

test_features,

train_features,

train_responses,

)

As in the Univariate Regression Tutorial we will evaluate the prediction performance of our models in terms of RMSE, mean diagonal posterior variance, the mean 95% confidence interval size, and the coverage, which ideally should be near 95%.

[24]:

Kcross_anisotropic = muygps_anisotropic.kernel(test_crosswise_diffs)

K_anisotropic = muygps_anisotropic.kernel(test_pairwise_diffs)

predictions_anisotropic = muygps_anisotropic.posterior_mean(

K_anisotropic, Kcross_anisotropic, test_nn_targets

)

variances_anisotropic = muygps_anisotropic.posterior_variance(

K_anisotropic, Kcross_anisotropic

)

confidence_intervals_anisotropic = np.sqrt(variances_anisotropic) * 1.96

coverage_anisotropic = (

np.count_nonzero(

np.abs(test_responses - predictions_anisotropic) < confidence_intervals_anisotropic

) / test_count

)

We also evaluate the isotropic model

[25]:

Kcross_isotropic = muygps_isotropic.kernel(test_crosswise_diffs)

K_isotropic = muygps_isotropic.kernel(test_pairwise_diffs)

predictions_isotropic = muygps_isotropic.posterior_mean(K_isotropic, Kcross_isotropic, test_nn_targets)

variances_isotropic = muygps_isotropic.posterior_variance(K_isotropic, Kcross_isotropic)

confidence_intervals_isotropic = np.sqrt(variances_isotropic) * 1.96

coverage_isotropic = (

np.count_nonzero(

np.abs(test_responses - predictions_isotropic) < confidence_intervals_isotropic

) / test_count

)

Results comparison

A comparison of our trained models reveals that the anisotropic kernel gets close to the true (0.1, 0.5) length scale, whereas the isotropic model has to learn a single parameter that has to split the difference somehow. This results in both a higher RMSE and larger confidence intervals in order to achieve similar coverage.

[26]:

print_results(

test_responses,

("anisotropic", muygps_anisotropic, predictions_anisotropic, variances_anisotropic, confidence_intervals_anisotropic, coverage_anisotropic),

("isotropic", muygps_isotropic, predictions_isotropic, variances_isotropic, confidence_intervals_isotropic, coverage_isotropic),

)

[26]:

| name | smoothness | length scale | noise variance | variance scale | rmse | mean variance | mean confidence interval | coverage |

|---|---|---|---|---|---|---|---|---|

| anisotropic | 1.500000 | [0.13513486 0.69879261] | 0.000000 | 1.997809 | 0.139248 | 0.020384 | 0.241594 | 0.940351 |

| isotropic | 1.500000 | 0.133508 | 0.000000 | 1.000000 | 0.285551 | 0.083645 | 0.514527 | 0.952047 |

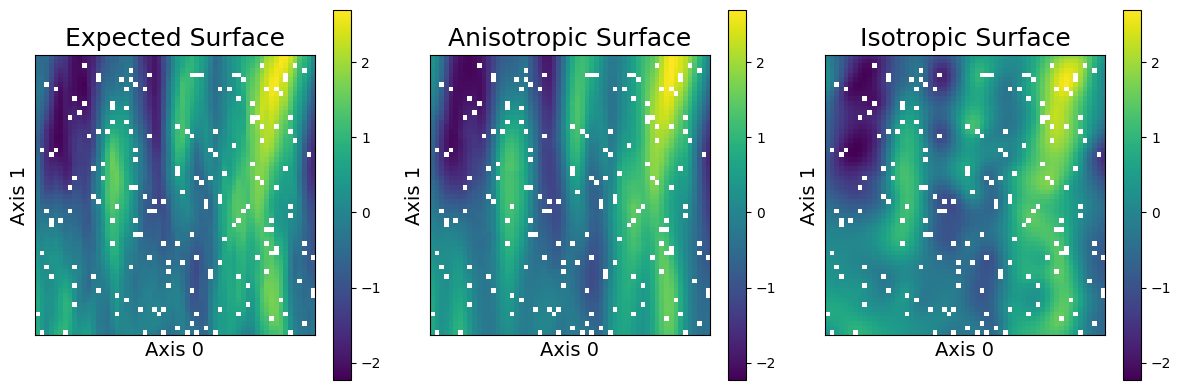

This dataset is low-dimensional so we can plot our predictions and visually evaluate their performance. We plot below the expected (true) surface, and the surface that our model predicts. Note that they are visually similar and major trends are captured, although there are some differences.

[27]:

sampler.plot_predictions(("Anisotropic", predictions_anisotropic), ("Isotropic", predictions_isotropic))

As we can see, the anisotropic model learns a surface that is much visually closer to what is expected. In particular, the isotropic surface has blobby circular features as it to be expected, as it is unable to differentiate between distances along the different axes.

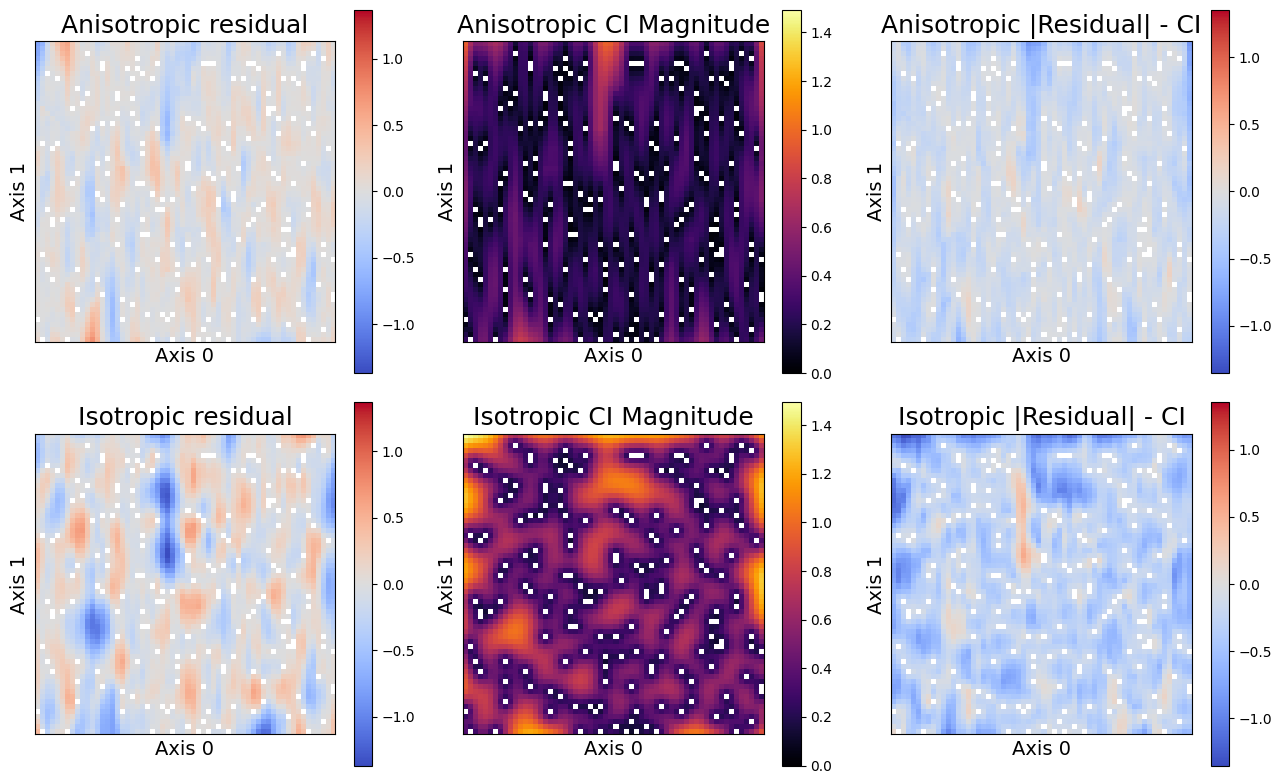

We will also investigate more details information about the errors. Below we produce three plots that help us to understand our results. The left plot shows the residual, which is the difference between the true values and our expectations. The middle plot shows the magnitude of the 95% confidence interval. The larger the confidence interval, the less certain the model is of its predictions. Finally, the right plot shows the difference between the 95% confidence interval length and the magnitude of the residual. All of the points larger than zero (in red) are not captured by the confidence interval. Hence, this plot shows our coverage distribution.

[28]:

sampler.plot_errors(

("Anisotropic", predictions_anisotropic, confidence_intervals_anisotropic),

("Isotropic", predictions_isotropic, confidence_intervals_isotropic),

)

The rightmost columns shows that the anisotropic assumptions both obtains lower residuals, i.e. the posterior means are more accurate. The middle column shows that the the posterior variances (and resulting confidence intervals) are smaller, and therefore the anisotropic model is also more confident in its predictions. Finally, the rightmost plot reveals the uncovered points - all red-scale residuals exceed the confidence interval. Not only does the isotropic model appear to have more uncovered points, they tend to be further outside of the confidence interval than those of the anisotropic model. These results demonstrate the importance of correct model assumptions, both on predictions and uncertainty quantification.